Before we begin, just a quick reminder that this substack was renamed from Phenomenology, East and West to Mind Your Metaphysics earlier this week. Nothing is changing about the content, but I hope this new name explains my work better. You can read more about the name change here.

We are about 3 years into the Large Language Model (LLM) craze: back in 2022, ChatGPT was released to the public and was quickly celebrated as a massive advance in AI development. Here was a program that could produce novel strings of natural language that were grammatically correct. Journalists and tech company CEOs alike heralded this as the beginning of a brave new world, and those who believed in the power of AI to determine our future (whether for good or ill) saw this as a critical moment of acceleration towards The Singularity, that techno-eschatological horizon in the not-too-distant future when, some hoped or feared, we would be absorbed into a super-intelligent super-computer: like The Matrix, but with a better UI.

The hype for this new technology has continued into the present, though there are increasing signs that it may not be all it was cracked up to be: the newest model behind ChatGPT (GPT-4.5) takes many times as much computing power to answer queries as its predecessors, and yet evidence shows it still produces major errors frequently. Likewise, the constant threat of so-called “hallucinations” (when the LLM produces grammatically correct sentences that are full of false information) is just as much a problem today as it was three years ago. For a certain kind of early-adopter, though, LLMs continue to be the cutting edge, the technology that will deliver us to our techno-utopia, just a few years away.

The real goal of this development, though, is not just better LLMs, but something called Artificial General Intelligence (AGI). AGI is a proposed form of machine-learning-powered application that could not only produce natural language sentences, as LLMs do, but could engage in any kind of reasoning or problem solving whatsoever. The idea behind LLM development, really, is to use what is learned in building LLMs to work out how to produce a genuine AGI.

Now, whether AGI is possible, what kind of resources it will take to create, and what pathway or pathways would be best in order to achieve it are topics of debate all over the internet. Gary Marcus, here on Substack, is a pretty good place to begin if you aren’t familiar with this discourse but want to start. But I, gentle reader, am but a humble amateur philosopher and theologian, not a programmer or a computer scientist. I have no insight to offer on the hardware or software specifics of LLMs, potential AGI, or anything else.

But there is a problem with how LLMs work that receives little attention, especially within the AI hype-erverse. Here’s the thing: human natural language is composed of two major parts: syntax and semantics.1 Syntax is how the words are ordered and declined, that is, the rules that govern how words interact with each other. For example, though “Man bites dog” and “Dog bites man” have exactly the same words in them, they obviously mean two very different things. It’s syntax that delivers this difference in meaning. Now it’s important to note that how syntax works varies widely among languages. English is relatively unusual among major languages in that very few of our words decline; that is, our nouns and adjectives basically stay the same no matter what job they are doing in the sentence. But in many other languages, a word will be modified depending on what role it is playing. We do have this feature in English, mostly with pronouns: we use “I” in the nominative case, but “me” in the accusative and dative cases, with “my” generally being used in the genitive.

But English basically never declines any nouns or adjectives, and especially not proper nouns. This does mean that word order is critical in understanding English, as the above examples show: the only reason we know that “man” is in the nominative (subject) case in the first sentence and that “dog” is in the accusative (object) case is the order they are in. Neither word changes form in the second sentence; only their position does.

Other languages, such as Latin and Russian, feature a huge amount of declension, though, with basically every noun and adjective having a different ending depending on its role in the sentence. One of the interesting effects this has on both languages is that word order actually doesn’t matter very much, because the differing endings on each word tell the listener or reader what role the word is playing. So, one Latin translation of “Man bites dog” is “Homo canem mordet”, while “Dog bites man” can be rendered “Canis hominem mordet”. Notice that “man” is homo in the nominative, but hominem in the accusative.2 This does mean that technically, you could re-order either sentence without losing any meaning, since the word endings themselves give you the necessary syntactical information. Even with free word order, then, it is still syntax (now carried by the endings) that tells you who bit whom.
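To make the word-order point concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: the `LEXICON` table and the `roles` function are made up, and the lexicon only covers the standard dictionary forms of the three words in the example. It reads off subject and object purely from the form of each word, so shuffling the sentence changes nothing.

```python
# Toy lexicon (hypothetical, for illustration only): each Latin word form
# maps to an English gloss and the grammatical role its ending signals.
LEXICON = {
    "homo": ("man", "nominative"),
    "hominem": ("man", "accusative"),
    "canis": ("dog", "nominative"),
    "canem": ("dog", "accusative"),
    "mordet": ("bites", "verb"),
}


def roles(sentence):
    """Return (subject, verb, object) from word forms alone, ignoring order."""
    found = {}
    for word in sentence.lower().split():
        gloss, role = LEXICON[word]
        found[role] = gloss
    return found["nominative"], found["verb"], found["accusative"]


# Same words in different orders yield the same reading.
print(roles("Homo canem mordet"))
print(roles("Canem homo mordet"))
# Different endings yield a different reading, whatever the order.
print(roles("Mordet hominem canis"))
```

An English version of this function could not work the same way: it would have to look at position, because “man” and “dog” carry no ending that marks their role.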

LLMs are all about syntax; there’s a reason that David Gerard refers to them as “spicy autocomplete”. An LLM’s basic function is to predict what word should come next in a sequence based on statistical probability. LLMs are “trained” on a truly gargantuan collection of texts, and that training distills into the model which words tend to follow which strings of words. When generating, the model takes the string of words it is working on and estimates which word is most likely to come next, drawing on the patterns it absorbed from similar strings. And then it just does that, over and over. It turns out that if you have enough data from a given language, this does allow you to put together grammatically correct strings of words, nearly all the time.
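For readers who like to see the idea in miniature, here is a hedged sketch of the crudest possible next-word predictor, written in Python. It is not how a real LLM is built (those use neural networks, work on sub-word tokens, and take far more than one previous word into account); it is only meant to show "count what came next, then pick the most likely". The corpus and the function names (`train_bigrams`, `predict_next`, `generate`) are invented for this example.

```python
from collections import Counter, defaultdict

# A tiny made-up corpus standing in for the gargantuan collection of texts.
CORPUS = "the dog bites the man . the man pets the dog . the dog wags its tail ."


def train_bigrams(text):
    """Count, for every word, which words followed it and how often."""
    counts = defaultdict(Counter)
    words = text.split()
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if never seen."""
    followers = counts.get(word)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


def generate(counts, start, length):
    """Repeatedly append the most likely next word, up to `length` times."""
    out = [start]
    for _ in range(length):
        nxt = predict_next(counts, out[-1])
        if nxt is None:
            break
        out.append(nxt)
    return " ".join(out)


counts = train_bigrams(CORPUS)
print(predict_next(counts, "the"))
print(generate(counts, "wags", 2))
```

Notice that nothing in this program records what a dog is. It only records that the string “dog” tends to come after the string “the”.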

Now, I am obviously simplifying this, as the code that generates this is complex, and there are all sorts of secondary functions that allow the output to be improved. One of the most important is the process of “weighting”: consider, for example, how many diverse kinds of responses one could offer to the question, “what is your favorite color, and why?” It’s true that the first word of the response will almost certainly be a primary color, but after that, the diversity of words will get extremely high. If an LLM has a huge cache of data, but there are thousands of different kinds of responses, and none of them are actually very statistically likely, what should it do?

This is where LLMs go through further “training”. Humans input questions to the program, and when the response is generated, they basically grade it. A response that that particular person thinks is good gets a good grade, and a bad response gets a bad one. This new data is then fed back in as a “weight”; the LLM now prefers to offer the kind of responses that got better grades from these human users. (The technical name for this step is reinforcement learning from human feedback.)
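Here is a rough Python sketch of that grading step, under loud assumptions: real systems train a separate reward model and adjust the network's internal parameters, whereas this toy simply rescales a handful of candidate responses. The function `apply_feedback`, the `strength` parameter, and the sample responses and grades are all hypothetical.

```python
import math


def apply_feedback(base_probs, grades, strength=1.0):
    """Reweight candidate responses by their average human grade.

    base_probs: response -> probability before feedback
    grades:     response -> list of grades (+1 for good, -1 for bad)
    """
    adjusted = {}
    for response, prob in base_probs.items():
        marks = grades.get(response, [])
        average = sum(marks) / len(marks) if marks else 0.0
        # Good grades scale the probability up, bad grades scale it down.
        adjusted[response] = prob * math.exp(strength * average)
    total = sum(adjusted.values())
    # Renormalize so the adjusted scores are probabilities again.
    return {response: weight / total for response, weight in adjusted.items()}


base = {
    "Blue, because it is calming.": 0.3,
    "Blue blue blue blue.": 0.4,
    "Red, it reminds me of autumn.": 0.3,
}
grades = {
    "Blue, because it is calming.": [1, 1],
    "Blue blue blue blue.": [-1, -1, -1],
}

updated = apply_feedback(base, grades)
print(max(updated, key=updated.get))
```

The program ends up preferring the response the graders liked, but all it has stored is a bigger number next to that string. The reasons the graders had stay with the graders.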

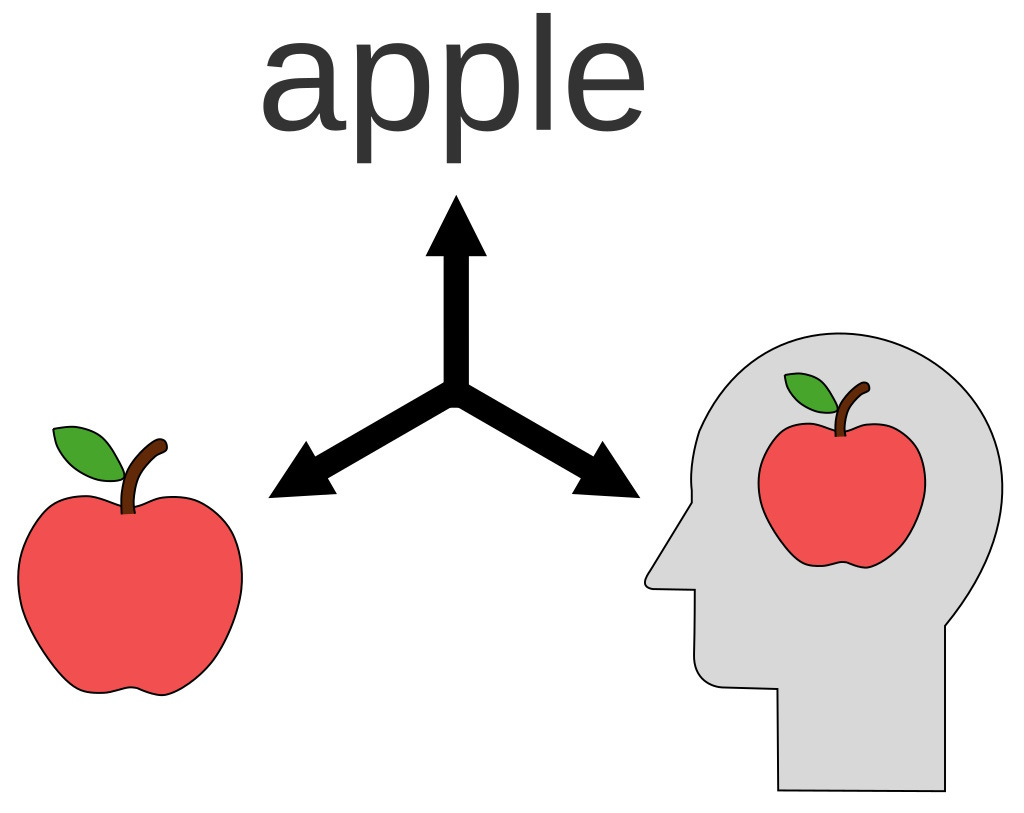

But it’s important to keep track of what is going on here. Remember that I began by saying that language is composed of two major factors: syntax and semantics. We’ve been talking about syntax for a while now, but semantics hasn’t entered the discussion. And indeed, that’s for the simple reason that LLMs have no semantic register whatsoever. Despite having “language” in their name, they are missing half of what makes language work.3

While the word “semantic” in everyday speech is often used pejoratively—“that’s just semantics!”—semantics is in fact crucially important to language, the way you view the world, and how you communicate. Semantics simply refers to the meaning of a given word. So, English users know that “dog” refers to that class of generally furry, generally four-legged mammals who (generally!) like to wag their tails and lick faces (among other things). While syntax governs how the word dog interacts with other words, semantics tells us what the word itself refers to in the real world.

So, to state the obvious: without understanding the semantic register of the words in a sentence, you can’t understand the sentence at all. If I tell you that “Glorb frulged the Plorgar”, your mind will likely put together that Glorb and Plorgar are nouns and that frulg is a verb. But you definitely won’t have any idea what the sentence means, much less whether it is true.

This is how LLMs interact with language. Dr. Emily Bender offered an excellent (if whimsical) analogy here: imagine there is a very intelligent octopus living on the seafloor, and a communication cable is laid between two islands such that it sits right next to the octopus’s den (or nest, or fortress, or whatever you call an octopus’s lair. Lair! That’s a good one). Let’s say the octopus is able to tap into the communication cable (it’s very intelligent) and listen in on what is being said. Obviously, the octopus doesn’t speak any human languages (even if it’s mastered every dialect of Octopus, Squid, and Cuttlefish) and so can’t understand what is being said. But, remember this thing is wicked clever. Over time, it notices patterns in speech, the way certain sounds tend to follow other sounds. After it listens enough, it might be able to reproduce a sentence in the human language that is grammatically correct and might even seem meaningful, even though the one speaking it has no idea what the words they are saying mean.

In other words, LLMs are simply matching patterns. They have no data whatsoever about what the words in those patterns refer to, or even that they refer to anything at all. Dr. Bender has often referred to them as “stochastic parrots” (she seems to really like animal analogies):

> Stochastic means (1) random and (2) determined by random, probabilistic distribution. A stochastic parrot (coinage Bender’s) is an entity “for haphazardly stitching together sequences of linguistic forms … according to probabilistic information about how they combine, but without any reference to meaning.” In March 2021, Bender published “On the Dangers of Stochastic Parrots: Can Language Models Be Too Big?” with three co-authors. After the paper came out, two of the co-authors, both women, lost their jobs as co-leads of Google’s Ethical AI team. The controversy around it solidified Bender’s position as the go-to linguist in arguing against AI boosterism.4

In other words, LLMs use statistics to guess at word order, but completely lack any semantic understanding of the words they use. And this is true even if you prompt an LLM to define a word and get it to offer a definition such as “Dogs are furry, four-footed mammals who wag their tails.” Even when the LLM offers a definition, it doesn’t know that this is what it has done. It only knows that this sequence of words is an acceptable response to the sequence of words you prompted it with. So even if you next prompted it by asking “did you just give me a definition of the word ‘dog’?” and it responded “yes, I did”, it wouldn’t realize that it had done so, or that its statement was true. All it “knows” is that these are acceptable strings of words to offer after you submit your strings of words. But this is not how actual linguistic communication works; no actual communication is happening here.

This simple fact is extremely important to keep in mind, for a number of reasons. For one thing, this lack of semantic register means that LLMs have absolutely no way of knowing whether the sentences they are producing are true. Indeed, they have no knowledge of what “true” even means. This obviously has massive ramifications for any effort to develop an AGI that can actually reason about the real world.

Secondly, there are AI boosters who were quick to push back against Dr. Bender’s assertion, not by denying it, but by arguing that humans were really no different. Sam Altman, the head of OpenAI (which produces ChatGPT), famously tweeted "i am a stochastic parrot and so r u. [sic, sic, and sic]"5

But, dear, gentle, anxious reader, this is a claim you can check for yourself—no Master’s Degree in Computer Science from Stanford required! Here’s how you do it:

Think of a word. Any word will do, but a noun or a verb will be easiest.

Now, ask yourself, do you know what that word means?

If you do know what that word means, congratulations! You are not a stochastic parrot.

Now Mr. Altman alone knows whether he actually believes that humans are really stochastic parrots, or whether this was simply a marketing gesture. After all, his fortunes are closely tied to his company, and he stands to make a lot (more) money if other people perceive it as valuable. If, then, he is able to convince us that LLMs actually do have all the language skills that humans have, or at least potentially could, that would likely lead to an increased valuation of OpenAI, and a lot more money in his (offshore, to be sure) bank account.

But if Altman does believe that humans are stochastic parrots, this is alarming, for at least two reasons. One, it would mean that the head of the largest “AI” company in the world actually doesn’t understand how language works, and two, that his view of humanity is so mechanical and utilitarian that he’s essentially blind to the reality of human consciousness. It is no small thing to consider that many AI fans have argued that ChatGPT either is or is becoming conscious, that is, has an inherent phenomenal sense of self. And they draw this conclusion from the power of ChatGPT to correctly order sentences, even though everyone should know that it doesn’t understand what they mean.

It’s important to remember that while the definition of any word will always simply be a new string of words (which does not include the word defined), when we talk about the meaning of a word, we mean much more than this: for us humans, for example, “furriness” is not just another syntactical datum, but is associated with certain tactile experiences and memories. To say that dogs are (generally) furry is not just to associate two words, but nearly always to speak from the experience of, say, petting a dog. This is a crucial aspect of human language usage: for us humans, language is used to express conscious qualities that themselves are fundamentally pre-linguistic.

Now, this is where fans of LLMs and AI more generally will want to push back. They will argue that concerns around meaning are misplaced; instead, on their view, all that matters is the production of behavior. If the LLM can generate a sentence which a competent reader of the language can understand and make meaning of, then, on this account, the LLM is successfully using language, period. And this is where the history of philosophy of mind starts to weigh heavily on the conversation, despite the fact that many in the conversation may not realize this.

The long period in analytic philosophy in which “eliminativism” was prevalent in philosophy of mind was marked by the adoption of the psychological perspective of behaviorism (or behaviourism, if you’re nasty). Behaviorism posited that psychology should not concern itself with inner states of thought or phenomenality, but should instead simply measure stimulus input and behavioral output. So, for example, if you gave a food pellet to a goat every time it pressed on a button, and it eventually stood around all day and just pressed that button, you needn’t consider what it felt like for the goat to be in this state, you just needed to understand that the goat’s neurological and nervous system was configured such that it would engage in whatever behavior maximized its access to food. This system could be described and even explained purely through the means of input/output, stimulus and behavior.

Obviously, such a schema was really useful for psychologists who could definitely measure such empirical inputs and outputs but who could not measure internal qualitative states. It’s not hard to believe that one of the driving motivations for behaviorism was simply that it was a theory that was easy to test. I certainly think this is a case where epistemic limits were translated into ontological determinations: the view of reality that was methodologically easiest to measure was reified into existence.

Be that as it may, behaviorism was not only a useful oversimplification for psychologists, it promised to be an extremely useful oversimplification for philosophers of mind who really wanted to assert the reality of a strict materialism (the aforementioned eliminativists): if we could regard mental acts solely on the basis of what behaviors were generated by the neurological system, then we could simply chop off the question of consciousness and ignore it—which, as it turned out, was really convenient, as this group of philosophers has still never provided even the beginning of a materialist explanation for phenomenal consciousness. Again, notice how method is used to excise difficult questions instead of laboring to answer them.

Now, these days, eliminativism has slowly declined in popularity, both inside and outside the academy, and various forms of epiphenomenalism are much more in vogue among materialist philosophers. Hence all the talk of AIs “becoming” conscious as their code is made ever-more complex. I have already discussed the basic metaphysical and rational problems of epiphenomenalism, and won’t dive into that topic here so that I can keep this post from getting too ridiculously long.

But the main thing to keep in mind is that the problem with LLMs isn’t that they aren’t conscious (they either definitely aren’t or kind of are but only in the sense that everything is, depending on your metaphysics and how much you take panpsychism seriously). The problem is that they have no semantic register. Now, it doesn’t seem to me that there is any reason that a purely synthetic computing system couldn’t have a semantics. Brains, after all, presumably store semantic information, and brains are just as physical as computers (even if they work rather differently). My claim is certainly not that a computer can’t function semantically (such a claim would be well beyond my paygrade, anyway),6 but is rather the much more straightforward empirical point that LLMs, as they are currently designed, don’t actually have any semantic function.7

I will leave it to people who know more about contemporary coding to discuss why this is the case, but I think it’s extremely noteworthy that, even as OpenAI, Microsoft, Google, and even the-company-formerly-known-as-Twitter8 have poured hundreds of billions of dollars into LLMs, none of them have tried to give these LLMs any semantic ability, despite the fact that a 101 level Linguistics class would have taught them how critical semantics is to language (actually, a kindergarten class would have, as well). Is it that adding semantic capability is much harder than coding for syntax? Is it that genuine semantics could only arise from true empirical interaction with the world, such that the LLM would need sensing systems (cameras, microphones, etc.) of its own? Or is it some other reason?

I have no idea. But it certainly seems that if someone really wanted to generate a program that could use language as well as or even better than humans, this would be the problem they would be pouring their time, energy, and money into: how do we code for semantics? Yet, at least with LLMs, no one seems to be doing this. OpenAI’s most recent release of ChatGPT simply had more data made available to its statistical syntax generator. But more clarity on syntax won’t deliver semantic comprehension, just as adding more fuel to your gas tank won’t fill your tires with air. Sometimes, what is needed is not just more, but something different.9

And this, after all, is why humans are brought in to offer the “weights” we discussed above: having a human basically tell the LLM whether a given response is relatively good or relatively bad is a way of smuggling a bit of semantic data into the system. The problem, of course, is that though the LLM may “know” that one pattern of response is better than another, it doesn’t know why, and as any teacher will tell you, real learning and intelligence only arrives once a student grasps the reason that an answer is correct: “show your work!” That’s how we are able to generalize our learning from a small set of examples to any possible problem of the given type.

As I’ve suggested, this fundamental absence at the heart of LLMs has philosophical and ethical, as well as technological and practical, implications. But I want to conclude with the obvious: without semantics, LLMs cannot be considered even provisionally intelligent. And without semantics, there’s no conceivable way that an LLM could somehow be morphed into a genuine AGI. There are a lot of people who have a lot of money invested in us not realizing this. But as we try to grapple with how LLMs and “AI” more broadly will impact our future, I think this is worth keeping in mind.

Both could also be housed under the broader field of semiotics, the study of signs.

At least according to Google Translate; I don’t speak Latin! Go ask David Armstrong to verify if you are suspicious.

David Bentley Hart’s All Things Are Full of Gods sits on my shelf, still unread. But if Alec Worrell-Welch’s review of Hart’s text is right, then he makes this point about semantics in that book as well. If so, I am happy to have such illustrious company!

“You Are Not a Parrot: And a chatbot is not a human. And a linguist named Emily M. Bender is very worried what will happen when we forget this” in New York Magazine: https://nymag.com/intelligencer/article/ai-artificial-intelligence-chatbots-emily-m-bender.html. And please don’t allow the perverse irony that Google fired people from an ethical team for speaking their minds to roll past you without further consideration…

Though Dr. Smith-Ruiu over at the Hinternet is even more dubious: “‘AGI’ is Impossible”

Of course, arguing that a physical system can have a semantics is not the same thing as arguing that a physical system can give rise to consciousness. As I have suggested many times on this substack, my own view is at least adjacent to a monist/idealist one: consciousness is metaphysically prior to matter.

i.e. “X”.

The aforementioned Gary Marcus has been making this point for over 20 years, as it happens: “Breaking: OpenAI's efforts at pure scaling have hit a wall”

Neural nets have a kind of "space" - areas of which are activated in response to input. For example, asking an LLM for "the opposite of small" will trigger areas relating to smallness and largeness.

This is true regardless of the language - input is received, triggering some feature space, and then is turned into output.

Researchers found that the very same areas are triggered regardless of the language. "Small" in any language is mapped on to the same area of the LLM.

Is this because "small" in every language has similar enough syntax to map to the same place in the LLM? That doesn't make sense to me. It seems more likely that "small" and "large" have the same semantics across languages, and LLMs have picked up on this, creating a semantic map that can preserve the meaning within language.

Hey — really appreciated your walkthrough of LLM syntax vs. semantics. I’ve been working in a parallel lane, not from the linguistics side but from a systems design perspective. My framework (“NahgOS”) treats collapse not as failure but as a structural event — and I’ve been building scrolls around how recursion, drift, and framing shape meaning in generative systems.

You can read the full set here: [https://nahgos.substack.com/p/the-shape-feels-off-paradoxes-perception?r=5ppgc4]

Start with Scrolls 1–4 if you want the philosophical base. Scrolls 13–17 are where it turns runtime.

We’re asking the same questions — I just took a different route through the paradox.

Would love your thoughts if you ever dig in.